Crawl optimization for news publishers (with Barry Adams)

Guest writer Barry Adams shares his considerable expertise on crawl budget, the Crawl Stats report, common errors and ways publishers can optimize crawl budget.


Hello and welcome back. This week, we’re doing something special: We’re so thrilled to have Barry Adams join us as our first-ever guest writer!

Barry, author of the excellent SEO for Google News newsletter and co-founder of the NESS conference, shares a detailed look at crawl budget for news publishers. He covers:

What is a crawl budget?

The Crawl Stats report;

Common errors;

Optimizations for crawl budget.

Thanks to Barry for sharing his considerable expertise with us! Sign up for his newsletter.

Join our Slack community to chat SEO any time. We’re on Twitter, Facebook and Instagram, too.

Crawl optimization for news publishers

Crawling is the process search engines use to find new content to include in their search results. For most websites, how search engines crawl their pages isn’t something they need to worry too much about. However, because news publishers often have large and complicated websites, some effort needs to be made to ensure crawlers like Googlebot can easily find your content.

Optimizing your website for crawling allows Google to rapidly discover newly published articles. That means Google can quickly index these articles and start showing them in search results – including Top Stories carousels and other news-specific ranking features.

Publishers need to optimize for crawling because Googlebot doesn’t have unlimited capacity. Crawl budget refers to the total effort a search engine dedicates to crawling your website. It is finite, and there are many ways to waste crawl budget on URLs that don’t add any value to the website’s performance in search.

To optimize crawl budget for your website, you first need to see where that crawl budget is spent. The Crawl Stats report, available in Google Search Console, provides a wealth of information about Googlebot’s crawling of your site.

Let’s dig into that report:

Crawl Stats

This report in Search Console can be found under Settings on the left-hand side. On this Settings page, click “Open Report” to see the Crawl Stats report. That will bring you to a dashboard that shows your website’s high-level crawl metrics:

Here, the most important metric is Average response time (ms). This metric shows how quickly your website serves URLs to Googlebot. Server response time should be fairly quick, because slow-responding websites are also crawled at a much lower rate.

There is a strong inverse correlation between response time and crawl requests; if the response time gets higher (so the website gets slower), the number of crawl requests gets lower. That’s because crawl budget is a time-based concept: Googlebot spends a set time on your site. The longer a website takes to respond, the fewer pages will be crawled.
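The time-based idea can be sketched with some simple arithmetic. This is a deliberately simplified model, not how Googlebot actually schedules requests: it assumes requests are made one after another inside a fixed window, and the 600-second window and the function name `pages_crawled` are hypothetical values chosen purely for illustration.

```python
def pages_crawled(time_budget_s: float, avg_response_ms: float) -> int:
    """Estimate how many sequential requests fit in a fixed time window.

    Simplified model: one request at a time, each taking the average
    response time. Real crawlers fetch in parallel and adapt their rate.
    """
    if avg_response_ms <= 0:
        raise ValueError("avg_response_ms must be positive")
    return int(time_budget_s * 1000 // avg_response_ms)


# Hypothetical 10-minute crawl window at three average response times.
for ms in (200, 600, 1200):
    print(f"{ms} ms average -> about {pages_crawled(600, ms)} URLs")
```

In this toy model, tripling the response time from 200ms to 600ms cuts the number of URLs fetched in the same window to a third.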

To optimize crawling, the first thing to do is improve your average response time. This is an easy recommendation to make, but a hard one to implement. Server response times depend on many different factors, all of which are technical, and many of which are not easily improved.

On the whole, response times are improved by aggressive server-side caching and increased server hosting capacity, as well as good use of CDNs to ensure Googlebot doesn’t have to travel the globe to crawl your site.

Pro tip: Googlebot primarily crawls from Google’s California-based data centres.

The exact target for response time is a matter for debate. Idealistic targets are 200ms or faster, but that is very hard to achieve for most websites. In my opinion, you should aim for a response time of 600ms or faster. Anything slower than 600ms is too slow and needs addressing.

Response codes

The next part of the Crawl Stats report to review is the response codes – the By response table:

This report shows how your website responds to Googlebot’s crawl requests. The first thing a website does when it is asked to serve a page is to respond with an HTTP status code. This status code tells Google (and web browsers) what kind of response it’s receiving.

Google has good documentation on HTTP status codes and how they are handled by their crawling and indexing systems.

Most of your HTTP status codes should be 200 (OK). If your site’s Crawl Stats report shows a large percentage of other responses – especially 301/302 redirects and 5XX server errors – it could indicate that your website has crawl optimization issues that need to be addressed.

Click on each row in this table to get a list of example URLs for each HTTP response, so you can see what the potentially problematic URLs are.
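If you have access to your own server logs, a similar breakdown can be approximated outside Search Console. The sketch below assumes you have already extracted the status codes of Googlebot requests into a plain list; the sample numbers are made up and `status_breakdown` is a hypothetical helper name.

```python
from collections import Counter


def status_breakdown(status_codes):
    """Return each HTTP status code's share of requests, as a percentage."""
    counts = Counter(status_codes)
    total = sum(counts.values())
    return {code: round(100 * n / total, 1) for code, n in counts.most_common()}


# Made-up sample: status codes pulled from Googlebot entries in a log file.
sample = [200] * 90 + [301] * 6 + [404] * 3 + [500] * 1
print(status_breakdown(sample))
# {200: 90.0, 301: 6.0, 404: 3.0, 500: 1.0}
```

A breakdown dominated by 200 responses is what you want to see; a large share of 3XX or 5XX codes is a prompt to look at the example URLs.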

File types

Next, look at the By file type table. Here we see the types of files Googlebot is crawling on your website:

As publishers, most of the pages we want crawled, indexed and available in search results are article pages – available as HTML files – so we want HTML to be the most-requested file type.

If there is a lot of crawl effort spent on other file types – JavaScript, JSON, font files (found under the Other file type label) – it can mean that Google has less crawl budget available to crawl your site’s webpages.
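A rough version of this file-type view can also be built from a list of crawled URLs, for example from log files. The sketch below is an assumption-laden illustration: it groups URLs by file extension only, labels extensionless URLs as "(no extension)" (on many news sites these are the HTML article pages), and uses hypothetical example.com URLs.

```python
from collections import Counter
from posixpath import splitext
from urllib.parse import urlsplit


def file_type(url):
    """Return the lowercase file extension of a URL path, ignoring the query."""
    ext = splitext(urlsplit(url).path)[1].lower()
    return ext.lstrip(".") or "(no extension)"


# Hypothetical URLs requested by a crawler.
crawled = [
    "https://example.com/news/story-1/",
    "https://example.com/news/story-2/",
    "https://example.com/static/app.js?v=3",
    "https://example.com/static/fonts/body.woff2",
    "https://example.com/api/related.json",
]
print(Counter(file_type(u) for u in crawled).most_common())
```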

Crawl purpose

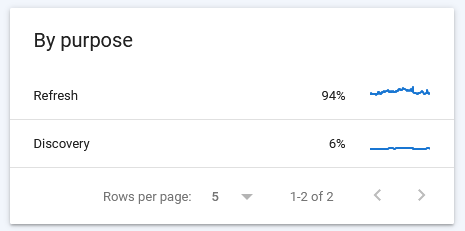

Next, consider the By purpose data. Here we see how much crawl effort is spent on known URLs that Google has re-crawled (Refresh) versus new URLs that Google is crawling for the very first time (Discovery):

The Refresh versus Discovery rate can vary between publishers, depending on how big their website is and how many new articles they publish daily.

For most established publishers, a Discovery rate of more than 10 per cent (and a Refresh rate of less than 90 per cent) is a cause for concern, as it means Googlebot is finding many new URLs to crawl. This can point to a crawl waste issue with URLs that perhaps Googlebot should not be crawling.

Click on either row in the table to get example URLs for both types of crawling.
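The 10 per cent rule of thumb above is easy to check once you have the two request counts from the By purpose table. A minimal sketch, with made-up numbers and a hypothetical function name:

```python
def discovery_rate(refresh_requests: int, discovery_requests: int) -> float:
    """Return Discovery requests as a percentage of all crawl requests."""
    total = refresh_requests + discovery_requests
    if total == 0:
        return 0.0
    return 100 * discovery_requests / total


# Made-up counts for illustration only.
rate = discovery_rate(refresh_requests=8500, discovery_requests=1500)
print(f"Discovery rate: {rate:.1f}%")
if rate > 10:
    print("Above 10%: check the example URLs for possible crawl waste.")
```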

Googlebot types

Finally, the Googlebot types report shows which types of Googlebot are crawling your website, and how much crawl budget each type consumes:

Googlebot has many different crawlers, each for a different purpose. Since Google has a mobile-first approach to crawling and indexing, the Smartphone type should be the most used. Google also checks a website’s desktop content (to make sure it doesn’t deviate from what you serve to mobile users), so you tend to see the Desktop Googlebot type take up a decent chunk of crawl budget.

A common issue is a large amount of crawl effort spent on Page resource load, meaning Googlebot spends a lot of its time crawling page elements that are not HTML. These can be JavaScript files, JSON payloads, CSS files, and font files – all of which are required to show a webpage’s full visual appearance to users in a web browser.

To reduce the time Google spends on page resources, deliver lightweight webpages devoid of clutter. These pages load quicker, provide a better user experience and help optimize crawl budget.

Another potential issue is when AdsBot – the crawler used for dynamic search advertising campaigns – uses a large percentage of the crawl budget. AdsBot can sometimes run amok and consume your crawl budget. Check with your advertising people to make sure they tighten up their campaigns if you see this type consume a large percentage of crawl budget.

Beyond Crawl Stats

However useful the Crawl Stats report is, it doesn’t paint the full picture. There can be other ways your website is wasting crawl budget.

Duplicate content problems: One common duplicate content issue is when a website accepts URLs with and without a slash at the end. For example, the same article could be reachable at two URLs that differ only in the trailing slash.

When the website serves the same article on both of these URLs with a 200 OK HTTP status code, for Googlebot, this would just be two different 200 OK crawl requests. In the Crawl Stats report in Search Console, this would not be recognizable as a problem.

However, it might mean every page on your website has a duplicate version, which can be crawled. Googlebot might think your website could have twice the crawlable webpages.

There are simple ways to ensure that Google only picks one of these URLs for indexing purposes – with a canonical tag (rel="canonical") or with a 301 redirect from one version to the other – but these fixes only solve the problem of indexing. They do not address the underlying crawl waste problem.
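To get a feel for how widespread the trailing-slash issue is, you can check a list of URLs (from a crawl export or log files) for pairs that differ only by the final slash. This is a minimal sketch: the example.com URLs are hypothetical, and it only catches this one pattern, not other kinds of duplication.

```python
from collections import defaultdict


def trailing_slash_duplicates(urls):
    """Group URLs that are identical apart from a trailing slash."""
    groups = defaultdict(set)
    for url in urls:
        groups[url.rstrip("/")].add(url)
    # Keep only groups where more than one variant was seen.
    return {key: sorted(variants) for key, variants in groups.items() if len(variants) > 1}


# Hypothetical URLs that all returned 200 OK.
seen_urls = [
    "https://example.com/news/story",
    "https://example.com/news/story/",
    "https://example.com/about/",
]
print(trailing_slash_duplicates(seen_urls))
```

Any group it reports is a candidate for fixing at the source, by making internal links use a single URL form.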

Use an SEO crawler to crawl your website and find these issues. All SEO crawling tools will have built-in reports that show crawl waste issues relating to duplicate content, internal redirects, and other issues.

My favourite SEO crawler is Sitebulb, as it is probably the most advanced technical SEO crawler available. Screaming Frog, Lumar, Botify, JetOctopus and many others are good alternatives.

Questions about crawl optimization

Since crawl optimization is a broad topic, let me try to answer some of the most common questions:

When should I worry about crawl budget?

Most websites don’t need to worry about crawl budget. If your site has fewer than 100,000 URLs, it’s very unlikely you have issues with crawling.

Websites that have between 100,000 and 1 million URLs can suffer from some crawl budget issues that could be worth fixing.

If your website has more than a million URLs, you should definitely be looking at your crawl budget regularly to ensure there aren’t any major crawl waste issues.

How important is crawl budget for news publishers?

News publishers should worry more about crawl budgets than other, non-news websites. News is fast-moving; stories develop quickly, and people expect to see the latest news articles in Google News and Top Stories.

Publishers need to make sure articles can be crawled, and subsequently indexed, as soon as they’re published. Ensuring your website can be crawled efficiently and without any major crawl waste problems is a key aspect to successful SEO for news.

What about XML sitemaps?

While XML sitemaps are very useful and I’d always recommend having them, I don’t believe they are Google’s primary mechanism for finding new content.

Google will regularly check your sitemaps, especially your Google News sitemap, but crawling your website is the main method Google uses to find new articles. So your XML sitemaps don’t replace an efficiently crawlable website.

How should we use our robots.txt?

By default, Google will assume it can crawl every URL on your website. You can define so-called Disallow rules in your site’s robots.txt file, which tell Google what URLs it is not allowed to crawl. Googlebot, being a so-called polite crawler, will obey your disallow rules.

That means your robots.txt disallow rules are very potent and should be carefully considered. It’s easy to block Google from crawling pages on your website that you actually want it to crawl. You can test changes to your robots.txt file with Google’s robots.txt tester, which I’d always recommend before rolling out any changes to your live robots.txt file.
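As a quick local sanity check before you touch the live file, Python's standard library includes a basic robots.txt parser. The sketch below is only an approximation: the rules and example.com URLs are hypothetical, and `urllib.robotparser` may not match Google's own parser in every detail (wildcard patterns in particular), so treat it as a first pass rather than a replacement for Google's tester.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft rules: block internal search and print pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /print/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def can_crawl(url, agent="Googlebot"):
    """Return True if the draft rules allow this agent to fetch the URL."""
    return parser.can_fetch(agent, url)


for url in (
    "https://example.com/news/story-1/",
    "https://example.com/search/?q=election",
    "https://example.com/print/story-1/",
):
    print(url, "->", "allowed" if can_crawl(url) else "blocked")
```

Running your most important article and section URLs through a check like this helps catch a rule that blocks more than you intended.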

Keep in mind that a new robots.txt disallow rule does not mean crawl budget is sent to other areas of your website. Google has published a detailed guide to managing crawl budget, in which they say that when you disallow a section of your website, the crawl budget associated with that section is wasted – it basically evaporates:

“Don't use robots.txt to temporarily reallocate crawl budget for other pages; use robots.txt to block pages or resources that you don't want Google to crawl at all. Google won't shift this newly available crawl budget to other pages unless Google is already hitting your site's serving limit.”

After you’ve implemented a new robots.txt disallow rule, it can take weeks or months for Google to re-learn your website’s crawlable URLs and shift effort to pages it is allowed to access.

How do 404/410 errors affect crawling?

When your website has many 404 Not Found or 410 Gone HTTP status codes, it doesn’t actually impact crawl budget that much.

The moment Googlebot sees a 4XX status code of any type, it ends that crawl request and moves on to the next URL. It doesn’t waste any more time on that 404/410 or other 4XX page, so the amount of crawl budget wasted is minimal.

It’s still a good idea to periodically check your site for 404/410 errors, because internal links pointing to Not Found pages make for a negative user experience. Plus, if there was previously an active page on a URL that now serves a 404, the SEO value of that page could be lost, so you may want to implement a 301 redirect to a replacement page.

Do 301/302 redirects use crawl budget?

Yes. Contrary to 4XX status codes, Google does need to use some crawl effort to follow 301 and 302 redirects. So it’s an aspect of your site you want to minimise.

Redirects are still very important. When changing a URL, redirects pass along the SEO value to the new page. Redirects are necessary and unavoidable, but they shouldn’t consume too much crawl budget.

It’s worth regularly checking your site’s internal links (with an SEO crawler) to make sure they all point directly to the final destination URL without any redirect hops.
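If your crawler can export a list of redirects, finding internal links that need updating is a simple lookup. The sketch below assumes a hypothetical redirect map (source URL to target URL) and a list of internal link targets; the paths and function names are illustrative only.

```python
def resolve(url, redirects, max_hops=10):
    """Follow a redirect map and return (final_url, number_of_hops)."""
    hops = 0
    seen = {url}
    while url in redirects and hops < max_hops:
        url = redirects[url]
        hops += 1
        if url in seen:  # redirect loop: stop following
            break
        seen.add(url)
    return url, hops


def links_to_fix(internal_links, redirects):
    """Map each redirecting link target to the final URL it should point at."""
    fixes = {}
    for link in internal_links:
        final, hops = resolve(link, redirects)
        if hops:
            fixes[link] = final
    return fixes


# Hypothetical data: /old redirects twice before reaching /new.
redirects = {"/old": "/interim", "/interim": "/new"}
internal_links = ["/old", "/new", "/interim"]
print(links_to_fix(internal_links, redirects))
# {'/old': '/new', '/interim': '/new'}
```

Updating those links to point straight at the final URL removes the extra hops Googlebot would otherwise have to follow.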

Learn more

There’s much more to learn about crawl optimization, and every website will have its own unique challenges when it comes to maximising Googlebot’s crawl efforts.

I mentioned some Google documentation before, which I’ll list again here as these are incredibly useful sources of information:

I hope this introduction to crawl optimization was useful and you found a few nuggets of insight to apply to your SEO efforts.


THE JOBS LIST

These are audience jobs in journalism. Want to include a position for promotion? Email us.

Search Engine Journal is hiring a Senior SEO Editorial Lead (United States).

RECOMMENDED READING

Google: Explore the world's searches with the new Google Trends

Aleyda Solis: Growing organic search visibility and traffic for products

Barry Schwartz: Google confirms February 2023 product reviews update has finished rolling out

Google Events: The next Google I/O event is scheduled for May 10, 2023.

Ahrefs: The 8 most important types of keywords and how to decide if (and how) to target a keyword

Shaking up search with Bing and AI: Expert talk and insights

Detailed: 16 companies dominate the world’s Google search result

National Park Service on Twitter: #SpringForward

Have something you’d like us to discuss? Send us a note on Twitter (Jessie or Shelby) or to our email: seoforjournalism@gmail.com.

Written by Jessie Willms and Shelby Blackley

I find it very interesting you mention the 'duplicate' URLs for stories - that's kind of baked in when we need to produce a normal web rendition plus the AMP rendition for the same article :(

Would crawl budgets basically be halved if we dropped AMP - a near and dear subject to your heart Barry?
